About Me

I am a physicist working on neural networks and machine learning. I am an independent researcher. Previously, I was a member of the research staff at the MIT-IBM Watson AI Lab and IBM Research in Cambridge, MA. Before that, I was a member of the Institute for Advanced Study in Princeton.

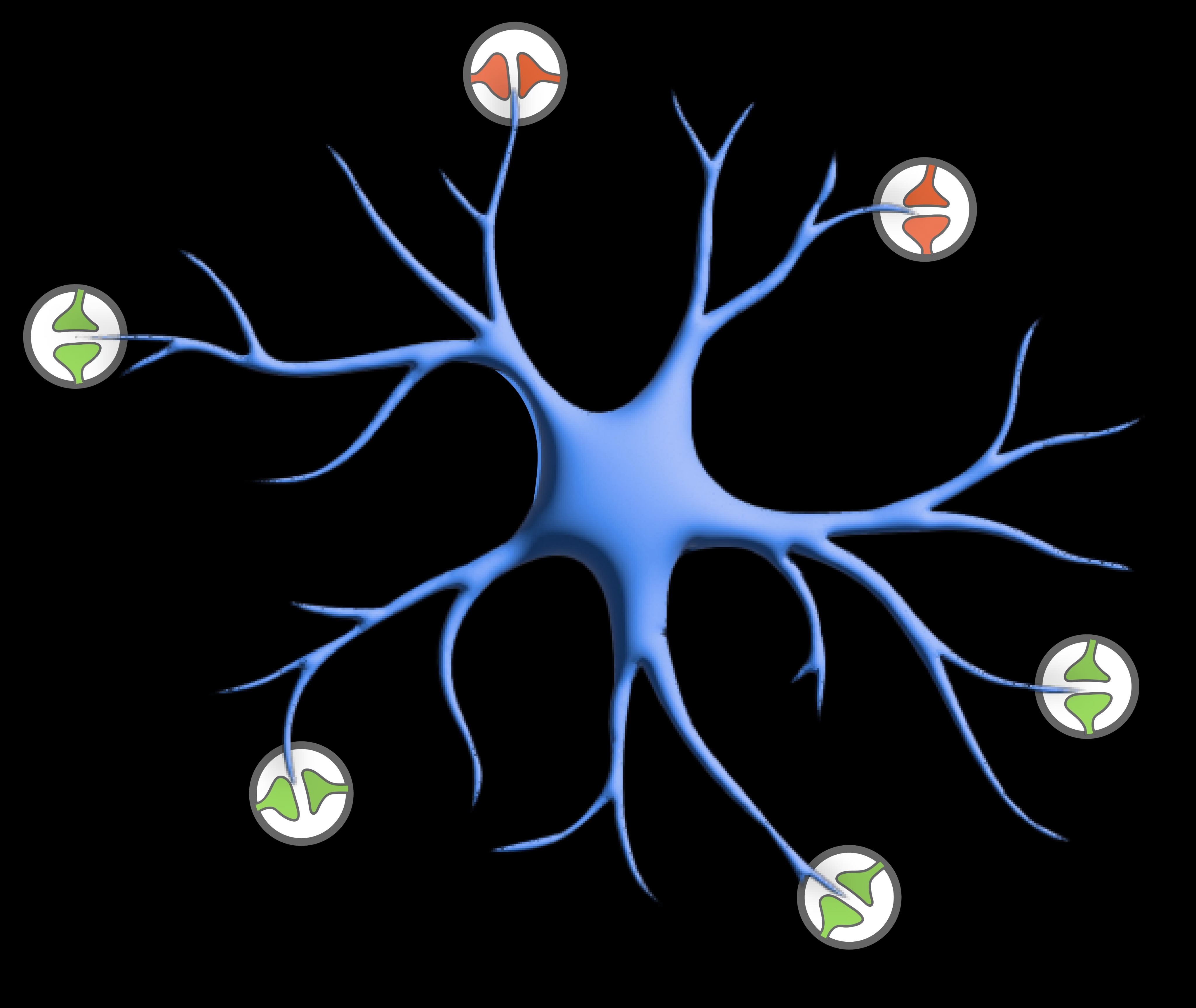

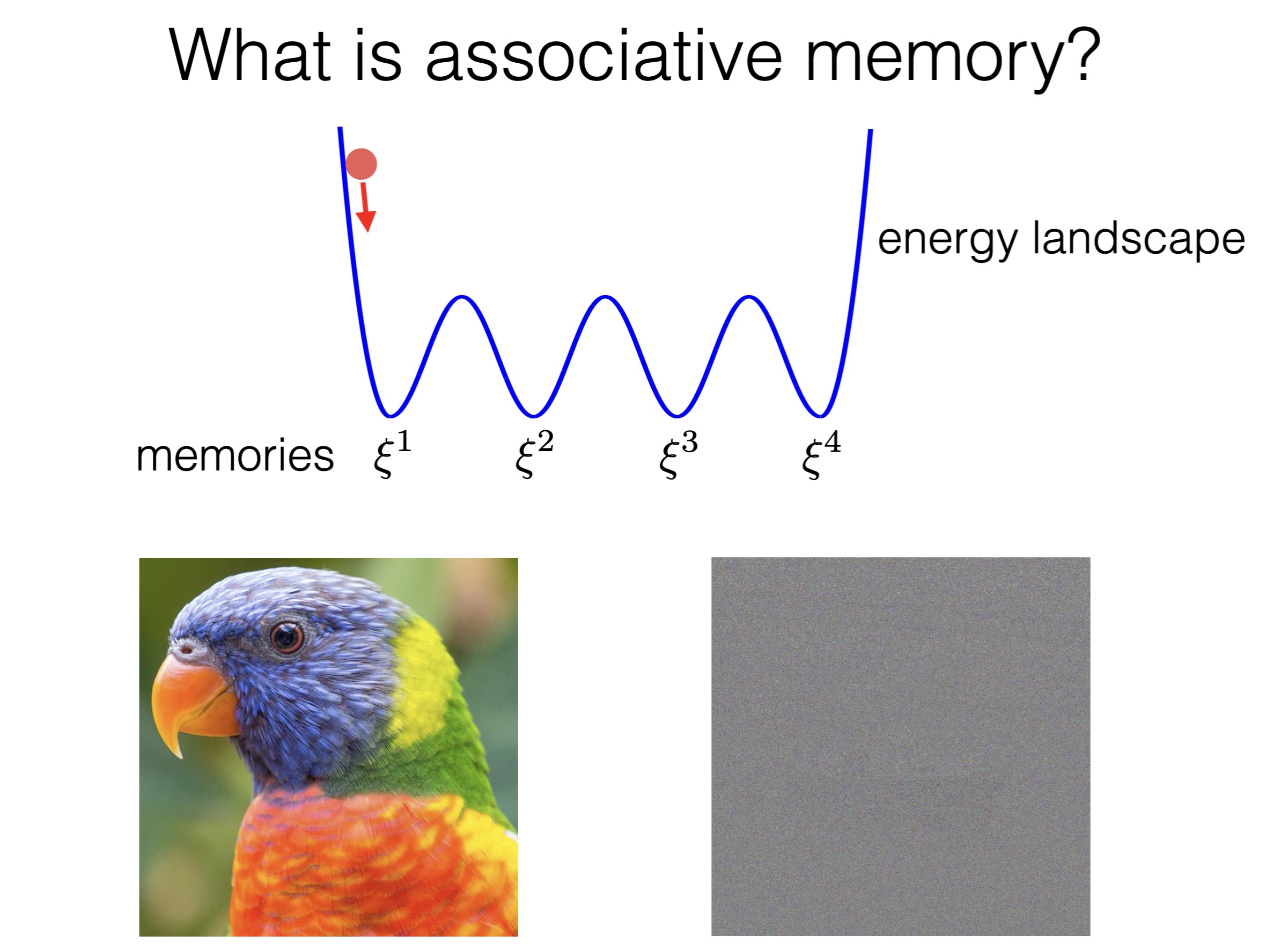

Broadly defined, my research focuses on the theoretical foundations of artificial neural networks, with particular emphasis on associative memory, energy-based models, and brain-inspired architectures. My work bridges the gap between physics, neuroscience, and AI, seeking to understand and develop algorithms that can process information as efficiently as biological neural networks.

Together with John Hopfield, I developed Dense Associative Memories, which significantly increased the information storage capacity of Hopfield Networks. My research has implications both for our understanding of how biological brains store and retrieve information and for the development of more efficient AI systems.

If you want to learn more about my work, please check out this recent Q&A with me or a profile about my work.